The Pentagon and Silicon Valley are in the midst of cultivating an even closer relationship as the Department of Defense (DoD) and Big Tech companies seek to jointly transform the American healthcare system into one that is “artificial intelligence (AI)-driven.” The alleged benefits of such a system, espoused by the military itself, Big Tech and Pharma executives as well as intelligence officials, would be unleashed by the rapidly growing power of so-called “predictive medicine,” or “a branch of medicine that aims to identify patients at risk of developing a disease, thereby enabling either prevention or early treatment of that disease.”

This will apparently be achieved through mass interagency data sharing between the DoD, the Department of Health and Human Services (HHS) and the private sector. In other words, the military and intelligence communities, as well as the public and private sector components of the US healthcare system, are working closely with Big Tech to “predict” diseases and treat them before they occur (or even before symptoms are felt), for the purported purpose of improving civilian and military healthcare.

This cross-sector team plans to deliver this transformation of the healthcare system by first using and sharing the DoD’s healthcare dataset, which is the most “comprehensive…in the world.” It seems, however, based on the programs that already use this predictive approach and on the need for “machine learning” in the development of AI technology, that this partnership would also massively expand the breadth of this healthcare dataset through an array of technologies, methods and sources.

Yet, if the actors and institutions involved in lobbying for and implementing this system indicate anything, it appears that another, if not the primary, goal of this push towards a predictive AI-healthcare infrastructure is the resurrection of a program managed by the Defense Advanced Research Projects Agency (DARPA) and supported by the Central Intelligence Agency (CIA) that Congress officially “shelved” decades ago. That program, Total Information Awareness (TIA), was a post-9/11 “pre-crime” operation which sought to use mass surveillance to stop terrorists before they committed any crimes, through collaborative data mining efforts between the public and private sector.

While the “pre-crime” aspect of TIA is the best known component of the program, it also included a component that sought to use public and private health and financial data to “predict” bioterror events and pandemics before they emerge. This was TIA’s “Bio-Surveillance” program, which aimed to develop “necessary information technologies and a resulting prototype capable of detecting the covert release of a biological pathogen automatically, and significantly earlier than traditional approaches.” Its architects argued it would achieve this by “monitoring non-traditional data sources” including “pre-diagnostic medical data” and “behavioral indicators.” While ostensibly created to thwart “bioterror” events, the program also sought to create algorithms for identifying “normal” disease outbreaks, essentially seeking to automate the early detection of either biological attacks or natural pathogen outbreaks, ranging from pandemics to possibly other, less severe disease events.

As previously reported by Unlimited Hangout, after TIA was terminated by Congress, it largely survived by privatizing its projects into the company known as Palantir, founded by PayPal co-founder Peter Thiel and some of his associates from his time at Stanford University. Notably, the initial software used to create Palantir’s first product was PayPal’s anti-fraud algorithm. While Palantir, for most of its history, has not openly sought to resurrect the TIA Bio-Surveillance program, that has now changed in the wake of the Covid-19 crisis.

In late 2022, Palantir announced that it and the Centers for Disease Control and Prevention (CDC) would continue their ongoing work to “plan, manage and respond to future outbreaks and public health incidents” by streamlining its existing biosurveillance programs “into a singular, efficient vehicle” to support the CDC’s “Common Operating Picture.” This “Common Operating Picture” aims to secure “robust collaboration across the federal government, jurisdictional health departments, private sector entities, and other key health partners.”

The CDC and Palantir publicized this partnership just months after the CDC announced the creation of its Center for Forecasting and Outbreak Analytics (CFA). This office now plans to expand biosurveillance infrastructure through public-private partnerships across the country, ensuring that local communities constantly supply federal agencies with a steady stream of biodata. That biodata is used to develop AI-generated pandemic “forecasts,” or viral outbreak predictions, that would inform policy measures both during pandemics and before they even occur, theoretically before a single person dies of a particular contagion.

On the surface, such a mission might sound as if it would serve public health; if government and private institutions can collaborate to prevent pandemics before they happen, well then, why not? Yet, again, the origins of Palantir demonstrate that these “healthcare” surveillance measures actually work in tandem with the deeper, aforementioned “pre-crime” national security goal of TIA, which powerful forces have been slowly implementing for decades. The ultimate goal, it seems, is to usher in a new, far more invasive surveillance paradigm in which both the external environment and the public’s internal environment (i.e. our bodies) are monitored for “errant” signals.

Palantir’s co-founder and largest shareholder, Peter Thiel, incorporated the company in the same year that Total Information Awareness (TIA) was shut down following prominent media and political criticism. Palantir received significant funding from the CIA’s venture capital arm, In-Q-Tel, as well as direct guidance from the CIA on its product development. As Unlimited Hangout detailed in its investigation into Donald Trump’s 2024 running mate J.D. Vance and his rise to MAGA stardom, Thiel and Palantir co-founder Alex Karp met with the head of TIA at DARPA, John Poindexter, shortly after Palantir’s incorporation.

The intermediary between the tech entrepreneurs and Poindexter was Poindexter’s old friend and a key architect of the Iraq War, Richard Perle, who called the TIA head to tell him that he wanted him to meet “a few Silicon Valley entrepreneurs who were starting a software company.” Poindexter, according to a report in New York Magazine, “was precisely the person” with whom Thiel and Karp wanted to meet, mainly because “their new company was similar in ambition to what Poindexter had tried to create at the Pentagon [that is, TIA], and they wanted to pick the brain of the man now widely viewed as the godfather of modern surveillance.” Since then, Palantir has been implementing the “pre-crime” initiatives of TIA under the cover of the “free market,” enabled by its status as a private company.

This story, along with the CIA’s intimate collaboration in developing Palantir’s early software, the CIA’s unique status as Palantir’s only client for its first several years as a company, and Palantir co-founders’ statements about the company’s original intent (e.g. Alex Karp’s statement that CIA analysts were always the intended clients of Palantir), demonstrates that the company was founded to privatize the TIA programs in collaboration with the military and intelligence communities, for which Palantir is a major contractor. Notably, TIA’s survival was actually enabled by its alleged killer, the US Congress, as lawmakers included a classified annex that preserved funding for TIA’s programs in the same bill that ostensibly “killed” the operation.

Yet while it appears that the national security apparatus plans to use the coming AI healthcare system for “pre-crime” and mass surveillance of Americans, this “predictive” approach to healthcare will also inform significant policy shifts for the next pandemic. Specifically, authorities will likely use the currently expanding biosurveillance infrastructure and AI disease forecasting software to develop “targeted” policy measures for specific communities, and potentially individuals, during future pandemics.

While Palantir stands at the forefront of this technocratic transformation of healthcare, the national security apparatus, Big Healthcare and Big Tech at large are all contributing to weaving this lesser known “bioterror” component of TIA into private business schemes that covertly carry on the tasks of the officially “shelved” program. This network of institutions consistently and conveniently omits the origins of its predictive biosurveillance healthcare approach. However, the special interests tied to their efforts, as well as the striking similarities between their alleged public health solutions and the Pentagon’s decades-old biowarfare response and surveillance programs, reveal the ulterior motive of this public-private collaboration.

This investigation will examine how the CDC’s Center for Forecasting and Outbreak Analytics (CFA) signals a major step towards the “AI-driven healthcare system”; how Palantir’s management of the program’s data strongly suggests that this partnership is the latest multi-sector implementation of TIA’s “pre-crime” agenda; and what horrifying possibilities the “AI-driven healthcare system” could enable in a future pandemic and in healthcare generally. This revolutionary system ultimately pushes society further into the sights of a digital panopticon that seeks surveillance and control of everything that makes up the average citizen, from outside their bodies to inside.

What Does the CDC Center for Forecasting and Outbreak Analytics (CFA) Do?

The CFA demonstrates that the AI healthcare and pandemic prevention industry is being materially (and quietly) implemented into public life in a significant way. Its policy measures massively expand invasive surveillance and, through sweeping biodata collection, will transform the way public health policy is developed and enacted during pandemics and in healthcare generally.

Based on the CFA and related developments in the public sector and among government contractors, American public health agencies are poised to use the mass collection of biosurveillance data to fuel: 1) targeted development and distribution of vaccines for pathogens with “pandemic potential,” 2) curated policy and targeted lockdowns of specific communities and/or groups based on their “risk levels,” and 3) medical prioritization of patients based on their AI-determined “needs,” along with AI-driven hospital management.

The CFA’s mission is to “advance U.S. forecasting, outbreak analytics, and surveillance capacities related to disease outbreaks, epidemics, and pandemics to support public health response and preparedness.” It plans to achieve this mission through several methods, but the data aggregated through Palantir programs within the CDC’s “Common Operating Picture,” and the way this data will manifest as policy, bind all these strategies together.

I. Your Information For All

Crucial to this effort is the CFA’s goal (arguably its primary goal) of creating a concrete digital infrastructure that would give multiple sectors and jurisdictions of government the ability to share, access and use the biodata they collect. One duty assigned to the program’s Office of the Director summarizes this strategy succinctly: it is tasked with guiding “the facilitation and coordination” of all biosurveillance activities, ranging from disease modeling to viral forecasting, along with the data extraction and collection necessary to support these activities, from the local to the federal level of government and healthcare entities. In simpler terms, the Office of the Director will ensure that the institutions that make up (and partner with) the CFA carry out the program’s aim of creating multi-sector, interagency, collaborative data sharing infrastructure.

Several other codified objectives of the program make clear how crucial this element of mass data sharing is to the CFA’s overall mission. For example, the Inform Division is tasked with sharing “timely, actionable” data with the federal government, local leaders, the public and even international leaders. It also coordinates real-time surveillance activities between CDC experts and US government agencies, and maintains “liaison” with CDC officials and staff, other US government departments and private sector partners.

Similarly, the Predict Division will develop “scientific collaborations to harmonize analytic approaches and develop tools,” which likely signals the importance of interoperability in collecting and sharing this data. Interoperability, or “harmonized” analytical approaches and tools, is a crucial component of the multi-sector collaborative data mining infrastructure that the CFA aims to cultivate. When data and the tools that collect it are interoperable, different vendors and institutions can work together seamlessly, exchanging data between different sources, whether military, hospital, academic or otherwise. In essence, interoperability centralizes a seemingly decentralized network of different vendors and institutions, all of whom are collecting and analyzing data plucked from various sources.

Likewise, the Office of the Director is tasked with maintaining “strategic relationships with academic, private sector, and interagency partners” as well as pursuing “opportunities with industry partners.” And finally, the Innovate Branch will collaborate “with academic, private sector, and interagency partners” as part of its goal to create “products, tools and business improvements” in order to make pandemic data analysis “flexible, fast, and scalable for CFA clients including federal, state, tribal, local, or territorial government” (emphasis added). In other words, the Innovate Branch will engage in cross-sector collaboration for the direct purpose of creating and improving technology that makes mass data sharing more expansive, rapid and seamless for both government authorities and “clients.”

In fact, in 2023 this goal materialized with the creation of the CFA’s Insight Net, which includes more than 100 network participants and spans “24 states and 35 public health departments.” Its vast network has expanded the CFA’s reach to influence “many vital public health decisions” made at the state and local levels, and it boasts that its network is integrated and unifying, “leveraging connections with state, local, private, public, and academic partners to create a consortium of collaborators.” The collaboration that Insight Net facilitates between the public and private sector manifests in the lives of citizens through the policy it informs, a central part of the program.

II. When Data Becomes Policy

The CFA plans to use this vast array of data to inform real-time policy decisions related to future pandemic planning and response. Several divisions within the CFA will contribute to this process of translating data into policy decisions.

The Office of the Director will oversee the general direction of this objective, as it defines “goals and objectives for policy formation, scientific oversight, and guidance in program planning and development…” The Office of Policy and Communications will then presumably work to turn these goals into concrete policies and legislation, as it is responsible for “review[ing], coordinat[ing], and prepar[ing] legislation, briefing documents, Congressional testimony, and other legislative matters,” as well as coordinating the “development, review, and approval of federal regulations,” presumably surrounding pandemic policy, surveillance, data and response efforts.

The Predict Division will play a crucial role in informing the specifics of these policies, as it generates “forecasts and analyses to support outbreak preparedness and response efforts” and collaborates with partners from the local to the federal to the international level “on performing analytics to support decision-making.” It will also perform tabletop simulations to “match policies and resources with [its AI-generated] forecasts,” leaving the fate of communities, in terms of both their freedom and their access to medical care, in the hands of algorithms and datasets.

Importantly, the CFA will use this data not only in long-term preparation or research, but also in critical, high-pressure moments. Specifically, the Predict Division’s datasets and models will be used “to address questions that arise with short latency.”

During outbreaks, such questions arising with “short latency” would likely relate to containment efforts and, thus, lockdown policy. The CFA’s Analytics Response Branch, which uses its “analytical tools” to support “decision making for key partners” during a potential or ongoing outbreak, is also responsible for analyzing “disease spread through existing data sources to identify key populations/settings at highest risk” and correspondingly providing “important information to key partners in decisions surrounding community migration” (emphasis added).

This sentence, though somewhat vague, suggests that AI-informed policy will subject certain communities and individuals to an extraordinary level of intrusion. Specifically, beyond more general, overarching pandemic policy, it appears that AI-generated forecasts and “risk levels” will dictate policy at the local, or perhaps even individual, level, directly controlling the movement, or “migration,” of communities.

Indeed, the CFA’s cooperative agreement states that the ability to apply data-driven, “mathematical” methods to address health equity concerns in the face of disease outbreaks is “of great interest to the CFA.” Key to this objective is the collection of data “on the social determinants of health” for use in disease forecasting. These “social determinants” include “geography (rural/urban), household crowding, employment status, occupation, income, and mobility/access to transportation,” as well as race, provided that race is not treated as an “independent exposure variable” but is instead viewed as a “proxy” for other social determinants.

While on the surface this “targeted” approach may appear to offer a solution to the universal pandemic policies implemented previously, the digitization of lockdowns still has the potential to seriously threaten individual and communal autonomy, only this time under the auspices of “objective” data, collected and interpreted by AI technology.

Who’s Behind the AI-Healthcare Push?

The tentacles of the biosecurity apparatus spread across multiple sectors of government and business, transcending the heavily blurred and essentially illusory lines between the public and private sectors, and between Big Tech and Big Pharma. Military officials, tech operatives and global public health institutions all play a significant role in lobbying for and implementing this emerging healthcare industry.

I. The Army

While the idea of developing preemptive vaccines against novel infectious pathogens dates back to the Reagan era, these ideas initially focused on developing preemptive vaccines for diseases that emerged in a human population via a bioweapon, rooting the strategy in national security as opposed to traditional disease response. Yet in the modern era, this militarized approach to public health has become the dominant ideology in establishment public health sectors, as demonstrated by the core ideology on which the CFA is built.

The CFA’s Office of the Director ensures that “the CFA strategy is executed by the Predict Division and aligned with overall CDC goals” (emphasis added). While this passage is too vague to reveal the specific intentions behind the referenced “CDC goals,” the CDC’s national biosurveillance strategy for human health sheds light on the hidden agenda here.

The strategy is cemented in “U.S. laws and Presidential Directives, including Homeland Security Presidential Directive-21 (HSPD-21), ‘Public Health and Medical Preparedness.’” HSPD-21 is a Bush-era Department of Homeland Security directive made to “guide…efforts to defend against a bioterrorist attack” that are also “applicable to a broad array of natural and manmade public health and medical challenges.” The directive aimed to predict disease outbreaks, whether natural or bioweapon-induced, through the “early warning” and “early detection” of “health events.” Strikingly similar to the TIA “Bio-Surveillance” goals, these values appear to have been placed in good hands at the CFA, as the Center’s director, Dylan George, previously served as vice president of In-Q-Tel, the venture capital arm of the CIA.

A recent trip that US Army officials made to Silicon Valley illustrates how the ideology behind this strategy has manifested through the relationship between Silicon Valley, academia and the Pentagon. On this “pivotal visit” to the San Francisco Bay Area in Aug. 2024, the US Army’s surgeon general, Mary K. Izaguirre, met with scientists at Stanford University and Google to further “the Army’s efforts to integrate cutting-edge technology and build stronger ties with civilian sectors.” Izaguirre also met with Civilian Aides to the Secretary of the Army (CASAs) and Army Reserve Ambassadors to discuss “their efforts to bridge the gap between the Army and the civilian community.”

When she met with Stanford scientists, who have “a long history of collaboration with the military, particularly through research initiatives that contribute to national defense and public health,” the scientists briefed her on advances in AI allegedly capable of “[revolutionizing] emergency medicine.” This technology was part of Stanford’s, and presumably the military’s and Big Tech’s, “broader mission to integrate AI into various aspects of health care…”

From there, Izaguirre traveled to Google’s headquarters, where she and the company’s tech experts discussed how Google’s “AI, machine learning, and cloud computing capabilities” could support the Army’s healthcare ambitions. She also thanked Google for helping veterans “find their footing” after their time in the military, acknowledging the role the company plays, through the “SkillBridge” program, in assisting soldiers in their transitions “into civilian careers,” which provides a convenient funnel from the military into Silicon Valley for lucky service members. The article concluded by remarking that through its collaboration with “leaders in academia and technology, the Army aims to equip its soldiers with the best tools and support for the challenges ahead.” Notably, Google also shares a $9 billion cloud computing contract with the Pentagon, together with Amazon Web Services (AWS), Microsoft and Oracle, for the military’s Joint Warfighting Cloud Capability (JWCC) system.

This meeting, along with the ever-growing partnerships between Big Tech and the Pentagon, clearly did not happen in a vacuum; instead, it represents a natural culmination of years-long industry plans to merge Silicon Valley data with military data. In March 2019, for example, two co-authors wrote an article for the Pentagon-funded neoconservative think tank Center for a New American Security (CNAS): Dr. Ryan Kappedal, a former intelligence officer whose career includes stints as lead product manager for the Pentagon’s Defense Innovation Unit (DIU) and data scientist at Johnson & Johnson, and who is currently a lead manager at Google; and Dr. Niels Olson, a US Navy Commander and the Laboratory Director at US Naval Hospital Guam. In the article, titled “Predictive Medicine: Where the Pentagon and Silicon Valley May Build a Bridge in Artificial Intelligence,” they fantasized about merging the very industries from which Kappedal hails:

“[With] the Department of Veterans Affairs (VA) healthcare system, the federal government has the largest healthcare system in the world. In the era of machine learning, this translates to the most comprehensive healthcare dataset in the world. The vastness of the DoD’s dataset combined with the department’s commitment to primary biological surveillance yields a unique opportunity to create the best artificial intelligence–driven healthcare system in the world.” (emphasis added)

While the CNAS authors claim that the Pentagon and Silicon Valley simply aim to improve civilian and military healthcare through this AI healthcare system, this technocratic evolution of healthcare importantly presents a mutually beneficial opportunity for each of these institutions. For the private sector, as the CNAS article states, the DoD possesses a plethora of data with “intrinsic commercial value.” For the Pentagon, such a relationship with Silicon Valley would extend its data mining efforts into the body, providing a wider array of useful data for national security purposes.

Further, implementing a predictive medicine infrastructure provides both sectors with the pretext to acquire more health data, and to do so continuously, in order to train the predictive AI technology. This has already granted the Pentagon the pretext to increase data-collection efforts in the name of creating this AI healthcare system, potentially explaining the creation of predictive health programs such as ARPA-H and AI forecasting infrastructure like the CDC’s CFA. Importantly, the biosurveillance field’s biggest advocates also have a long history of stressing the importance of mass interagency data sharing, including between the public and private sectors, once again highlighting the cross-sectoral commitment to using this data for both profit and national security.

II. Big Pharma

While the CNAS authors wrote their “Predictive Medicine” article before the Covid-19 pandemic, the most prominent institutions in the pandemic preparedness and biosurveillance field have since begun marketing the “predictive” approach to public health as the solution to the “next pandemic.” One of the most notable aspects of predictive health involves using biosurveillance data to fuel the research and distribution of medical countermeasures, a policy that the CDC’s CFA is pursuing:

“[The Analytics Response Branch] works with key partners to inform decisions on medical countermeasures during an active outbreak.”

Unsurprisingly, this policy has the backing of the industry that most clearly stands to gain from it: Big Pharma. In 2023, scientists from Pfizer’s mRNA Commercial Strategy & Innovation division (one of whom hails from the Johns Hopkins Bloomberg School of Public Health) wrote an article titled “Outlook of pandemic preparedness in a post-COVID-19 world,” in which they pushed for the use of predictive AI technology to inform real-time policy during the “next pandemic.” The scientists pitched AI-informed policy decisions as the solution to the downsides of universal pandemic policies, specifically through a more “targeted” approach to pandemic policy.

The paper advocates for the development of preemptive vaccines, which are vaccines developed for viruses that do not yet spread widely in human populations. Surveillance data on pathogens with pandemic potential fuels this research; the paper notes that the benefits of vaccination have “continued to progress” thanks to the power of constant biosurveillance and accelerated manufacturing, as demonstrated by the development of “updated vaccines for evolving variants of SARS-CoV-2.”

Similarly, the authors tout the ability of these preemptive vaccines to be rapidly deployed to protect populations from outbreaks of pathogens with pandemic potential, provided the pathogen “closely aligns” with a preemptively developed vaccine stockpile. These preemptive vaccines, however, would only offer temporary protection until “more tailored interventions” were developed, if deemed necessary.

This echoes long-standing calls from other global health institutions to develop preemptive vaccines. As a previous Unlimited Hangout investigation reported, the WHO’s 2014 CEPI-partnered program, the Research and Development Blueprint for Emerging Pathogens (R&D Blueprint), aims to “reduce the time” it takes for vaccines to reach the market after a pandemic is declared. It does this, however, not only by conducting R&D on pathogens that have already reached pandemic status, but also by conducting R&D on diseases that “are likely to cause epidemics in the future.” CEPI itself, launched with investments from the Bill & Melinda Gates Foundation and the Wellcome Trust, was founded to develop “vaccines against known infectious disease threats that could be deployed rapidly to contain outbreaks, before they become global health emergencies.”

CEPI is currently helping to build up an apparatus of research organizations and private companies pursuing predictive vaccine development, which may end up being some of the “key partners” that the CFA plans to work with to “inform decisions on medical countermeasures during an active outbreak.” This is plausible given CEPI’s close partnership with the Gates Foundation through the Gates Foundation’s Gavi, the Gates Foundation’s history of funding the CDC, and Gates’ potential influence within the CFA (demonstrated later in this article). CEPI has made these investments to further its “100 Days Mission,” which aims to “accelerate the time taken to develop safe, effective, globally accessible vaccines against emerging disease outbreaks to within 100 days.”

Interestingly, CEPI claims that building a “Global Vaccine Library” is crucial to the success of its 100 Days Mission. The Library plans to use AI technology to predict how “viral threats might mutate to evade our immune systems” in order to identify specific “vaccine targets.” Richard Hatchett, the CEO of CEPI (formerly of the US Biomedical Advanced Research and Development Authority (BARDA)), stated that building the Global Vaccine Library would require “coordinated investments in countermeasure development and, in outbreak situations, rapid data sharing.” Perhaps the datasets that the CFA will use and expand could assist in creating this Global Vaccine Library by making possible the “rapid data sharing” that CEPI requires.

III. Building on Tiberius

Another element of informing medical countermeasure policy through data is distribution, something Palantir gained direct experience with during the COVID-19 pandemic. The CFA now plans to use its data and analytical tools to inform its “key partners” on “decisions on medical countermeasures during an active outbreak.”

During COVID-19, the Pentagon-run Operation Warp Speed initiated its vaccine distribution policy in direct collaboration with Palantir through the Palantir program “Tiberius,” which the CDC has since pledged to unite with other Palantir biosurveillance programs as part of its “Common Operating Picture.” Tiberius uses a Palantir software product called Gotham, which also runs another Palantir-operated government program called Health and Human Services (HHS) Protect, “a secretive database that hoards information related to the spread of COVID-19 gathered from ‘more than 225 data sets, including demographic statistics, community-based tests, and a wide range of state-provided data.’” The database notably includes protected health information (PHI), which led Democratic senators and representatives to warn of the program’s “serious privacy concerns”:

“Neither HHS nor Palantir has publicly detailed what it plans to do with this PHI, or what privacy safeguards have been put in place, if any. We are concerned that, without any safeguards, data in HHS Protect could be used by other federal agencies in unexpected, unregulated, and potentially harmful ways, such as in the law and immigration enforcement context.”

During the pandemic, Tiberius drew on this health data so that it could “help identify high-priority populations at highest risk of infection.” Tiberius identified the risk levels of these populations in order to develop “[vaccine] delivery timetables and locations” that prioritized vaccines for specific “at risk” populations. Most often these populations were minority communities. Notably, the COVID-19 vaccines are associated with an excess risk of serious adverse events, and can cause fatal myocarditis.

Further, as noted in a previous Unlimited Hangout investigation, intelligence agencies and law enforcement agencies, such as the Los Angeles and New Orleans police departments, also use Gotham for “predictive policing,” or pre-crime initiatives that disproportionately affect minority communities (ICE also used Palantir’s digital profiling tech to apprehend and deport illegal immigrants). The US Army Research Laboratory also found Gotham useful, as evidenced by its $91 million contract with Palantir “‘to accelerate and enhance’ the Army’s research work.” More recently, Palantir teamed up with Microsoft to offer national security leaders the opportunity to use a “first-of-its-kind, integrated suite of technology,” including its Gotham software, among other products, for “mission-planning” purposes (the military also uses Gotham for “targeting enemies” through its “AI-powered kill chain”). These lucrative contracts with the intelligence/military state highlight the dual-use nature of the technology behind the “AI-healthcare” revolution, and thus raise the question: will Palantir and government agencies use the health data that CFA can access for “dual-use,” national security purposes?

The Digitization of Healthcare: Kinsa, Palantir and the ‘Targeted’ Nature of Future Pandemic Response

Notably, some prominent institutions within the biosecurity apparatus have already begun pitching “targeted” pandemic policy as a solution to the now widely recognized failings of the more general non-pharmaceutical intervention (NPI) policies of COVID-19, such as lockdowns, social distancing and school closures, which unleashed economic devastation, loss of life and declining mental health on many populations.

For instance, the Pfizer paper “Outlook of pandemic preparedness in a post-COVID-19 world,” mentioned earlier, surmises that the detrimental effects of NPIs such as school closures, lockdowns and hospital policies may be felt years into the future, and may even be shown to increase in severity with further study. Lockdowns in particular, the authors note, “resulted in significant economic, social, and health costs,” and they even state that “the effect of social distancing on the mental health of children and adolescents [continues] to be difficult to measure.” From a bureaucratic perspective, the paper also admits that consistent, long-term use of NPIs can be “challenging because people grow tired and apathetic toward them.”

The solution the Pfizer scientists offer is “early action” used to “leverage all available interventions as soon as possible in pandemic response,” and, importantly, “geographically specific and informed NPI policies.” At least one of the solutions the paper puts forth to implement both “early action” and “geographically specific” policies appears to be to “have a gradient of warnings that separate dangerous pandemics from more manageable outbreaks…” This proposed policy recalls the CFA measure that analyzes “disease spread through existing data sources to identify key populations/settings at highest risk” (emphasis added).

The paper goes on:

“In healthcare settings, an artificial intelligence platform could help prioritize patients based on their medical needs, effectively managing resources during triage situations. Similarly, a gradient-based warning system for pandemics could initiate appropriate responses at different levels of threat, with each level tied to specific actions. An early warning or Level 1 might involve increased surveillance and information sharing, while higher levels could trigger more drastic measures like regional shutdowns or international travel restrictions.” (emphasis added)

Importantly, a surveillance system this vast could only be achieved through the “facilitation and coordination” of all biosurveillance activities, across local to federal levels of government and healthcare entities, that the CFA will carry out.

Other Pharma-backed organizations have also called for targeted pandemic response policy, such as the Committee to Unleash Prosperity, which stated that “identifying the most vulnerable groups and focusing resources on their protection will always be vital to any sensible crisis response.” The Committee to Unleash Prosperity is funded by the Pharmaceutical Research and Manufacturers of America, whose members include Pfizer, Johnson & Johnson, GlaxoSmithKline, Merck and Sanofi, among other Big Pharma companies. The group was also notably co-founded by Larry Kudlow, formerly one of Trump’s top economic advisors and director of the National Economic Council during his first term, who, during Covid-19, was part of the group that decided to effectively outsource the U.S. fiscal response to the crisis to Larry Fink’s BlackRock.

The push for such “targeted” measures is moreover indicative of an even greater systemic transformation taking place within the healthcare system. The call for an AI platform to “help prioritize patients based on their medical needs, effectively managing resources during triage situations” alludes to the industry effort to digitize hospital management, resource allocation and patient care and, in doing so, expand the health datasets of the biosecurity apparatus. Private companies including Palantir, among others, already appear to be playing crucial roles in this AI-hospital revolution.

Meanwhile, the CFA codifies the push toward this AI system through several policies:

“[The Predict Division] assists with tabletop exercises to match policies and resources with forecasts”

“[The Office of Management Services] provides direction, strategy, analysis, and operational support in all aspects of human capital management, including workforce and career development and human resources operations”

The first company involved in this shift worth noting is Kinsa Health, a company that “uses internet-connected thermometers to predict the spread of the flu” and that is carrying out the kind of data mining that would enable the predictive and targeted pandemic policy CFA seeks to implement. According to The New York Times, Kinsa is “uniquely positioned to identify unusual clusters of fever because they have years of data for expected flu cases in every ZIP code.” During the COVID-19 pandemic, Kinsa was allegedly able to forecast which areas would become “COVID-19 epicenters” before more traditional surveillance systems could.

The thermometers supply data by connecting “to a cellphone app that instantly transmits their readings to the company.” Interestingly, “Users can also enter other symptoms they feel. The app then gives them general advice on when to seek medical attention.”

In the aftermath of the COVID-19 pandemic, Kinsa has emerged as a rising star within the predictive health industry, having secured a significant deal with healthcare company Highmark Health to “predict health care utilization, recognize staffing needs, and plan emergency department and ICU bed capacity when infectious diseases like COVID-19 and influenza spike.” Highmark is described as “the first health delivery system to utilize Kinsa’s early warning system to model staffing needs and bed capacity,” signifying Kinsa’s growing role in this healthcare shift.

This preceded health technology company Healthy Together’s 2024 acquisition of Kinsa, which marked a significant step for the thermometer company, as the acquisition signals the expansion of Kinsa’s predictive powers and datasets into the public sector. The announcement proclaimed that “the synergy between the two companies will empower pharmaceutical companies, healthcare providers, Medicaid agencies, insurance companies, and public health departments with AI-driven tools to proactively respond to and manage illness.” The bold vision here is perhaps unsurprising once Healthy Together’s peculiar ties to government and to Thiel-connected figures, as well as its larger ambitions, are understood.

Healthy Together is a Software as a Service (SaaS) company, SaaS being a service that “allows users to connect to and use cloud-based apps over the Internet.” It prides itself on unifying “the goals of government programs and the needs of residents into a single platform.” It does this through its “One Door” approach, or rather, its mission to make one’s health records and immunization history available “all in one place,” that place being its proprietary app. Indeed, Healthy Together has already partnered with the Department of Veterans Affairs (VA) Lighthouse program to access veterans’ health data ranging from immunization records to “test results, allergy information, clinical vitals, medical conditions and appointment information.” This connectivity was achieved through the VA’s application programming interface (API): veterans using the Healthy Together app access their medical records through the VA API, which connects different computer programs together. This serves as a small-scale example of the growing harmonization between military and Big Tech data.

In addition to health data, the company also aims to link welfare data and access to its app, a particularly concerning feature given that some US health experts tied to the CIA’s In-Q-Tel and to official government Covid-19 response policy previously pitched linking welfare benefits to vaccination status during the Covid-19 crisis.

When Healthy Together partnered with Amazon Web Services (AWS) to join its AWS Partner Network (APN), it created a program that achieves this linkage of welfare data with its app. It was called the “Women, Infants, and Children (WIC) Management Information System (MIS),” or Luna MIS. WIC is a United States Department of Agriculture (USDA) federal assistance program that provides low-income pregnant women and children under the age of five with services such as EBT cards to help them afford food. Luna MIS reportedly streamlines “the management of WIC benefits, from application and enrollment to benefit issuance and redemption,” meaning it moves users’ entire interaction with WIC benefits, from registration to allocation, into the Healthy Together app. The company further supports this “One Door” approach for eligibility, enrollment and recertification for other social programs such as “Medicaid, SNAP, TANF…as well as behavioral health, disease surveillance, vital records, child welfare and more.”

Whether data collected through technology such as Kinsa thermometers, or health records such as immunization status, might inform one’s eligibility or enrollment for social programs in the future remains to be seen. Either way, the company already works directly on welfare benefits with the Florida Department of Agriculture and Consumer Services, the Chickasaw Nation in Oklahoma, the Missouri Department of Social Services, Maryland Market Money and the Maryland Department of Agriculture, among others. Given that the company already collects vast amounts of medical data, including vaccination records, linking such data to welfare benefits would likely prove a simple task for the company.

While there is not much public information available about Healthy Together’s board or funding, it appears that the “One Door” service was born out of another app, no longer available, called Twenty (a significant number of Healthy Together’s co-founders/CEOs apparently still work at Twenty, and hold the exact same positions at each company).

According to The Salt Lake Tribune, Healthy Together was developed in the early days of the pandemic, when the state of Utah “contracted with mobile app developer Twenty to launch Healthy Together” in order to track the “movements” of Utah’s residents and, for those who fell sick, equip public health workers with a digital contact tracing tool to locate “where they crossed paths with other users.” The Tribune reported that Utah provided Twenty with a $1.75 million contract, along with an additional “$1 million to further develop [Healthy Together].” In other words, Healthy Together was built as a public-private “contact tracing” (i.e. surveillance) app.

Twenty, according to its LinkedIn, was an app that aimed “to drive more human connection” by making it easier for friends to meet up and make plans. It did this, however, by allowing users to see the locations of nearby friends, even clueing them into their friends’ later plans and pinning events for people to meet up at. While Healthy Together and Twenty are separate apps, it appears that the seemingly social location-tracking technology used for Twenty was swiftly repurposed to create the contact-tracing and health-focused app Healthy Together, as the co-founder and co-CEO of Twenty and Healthy Together, Jared Allgood, stated:

“…at the beginning of the pandemic, we were contacted by some state governments who were interested in using some of the mobile platform technology that we had built previously, to create a link between the health department and residents of their state…” (emphasis added)

The Salt Lake Tribune explained the contact tracing process that Healthy Together’s repurposed technology helped the state achieve:

“the app uses Bluetooth and location tracing services to record when its users are in close proximity. When a user begins to feel sick, he or she can enter symptoms on the app, which provides directions for testing.”

“State epidemiologist Angela Dunn further explained the process…‘So if you choose to share your data with our contact tracers’ by using the app, she said, ‘they’ll be able to know about the places that you’ve been while you were infectious, and it will also provide our contact tracers with a snapshot of other app users who you had significant contact with and potentially exposed to COVID-19 as well’… ‘that will allow contact tracers to follow up directly with those people and provide them information about how to protect themselves and others,’ she said.”

Now, the goals and capabilities of Healthy Together appear to have expanded into AI viral forecasting and hospital management with its acquisition of Kinsa, making the private company a potential asset for the CDC’s CFA, as its experience working with health data would seemingly make it a fitting “existing data source” for the program.

The people behind these companies, however, also place Kinsa and Healthy Together not too far removed from CFA’s other private sector partners. Healthy Together was funded by SV Angel, the venture capital firm founded by “The Godfather of Silicon Valley,” Ron Conway, who was an early investor in the Elon Musk- and Peter Thiel-founded PayPal and also in the Peter Thiel-backed Facebook (Thiel and Conway were among the earliest backers of the social network).

Another co-founder and co-CEO of Healthy Together and Twenty, Diesel Peltz, boasts interesting ties to the incoming Trump administration through his father, billionaire and chairman emeritus of the Wendy’s Company, Nelson Peltz. Nelson Peltz claims responsibility for reconnecting Elon Musk and Trump, which led to Musk financially and very publicly backing the 2024 Trump campaign. Since the election, Musk’s outsized role in setting incoming government policy has become both obvious and controversial. Variety reported the following about the Peltz family’s role in uniting Musk with Trump:

“[Peltz] said Musk, along with Peltz’s son Diesel…‘had a breakfast at the house, we invited Donald for breakfast, and they [Musk and Trump] sort of reunited again… I hope it’s good, you know. I was a matchmaker.’” (emphasis added)

Importantly, both Thiel and Musk played significant roles in successfully lobbying for the selection of Thiel protégé JD Vance as Trump’s vice presidential nominee. Now, Musk is set to head Trump’s Department of Government Efficiency advisory group, alongside Vivek Ramaswamy, founder of the biotech company Roivant (which has created subsidiary biotech companies with Pfizer and has invested deeply in mRNA technology), to “dismantle government bureaucracy, slash excess regulations, cut wasteful expenditures and restructure federal agencies.”

The meeting between the two Peltz men, Musk and the President-elect took place in late spring, and just a few months later Palantir and the Wendy’s Supply Chain Co-op announced a partnership to “bring [the co-op] towards a fully integrated Supply Chain Network with opportunities for AI-driven, automated workflows” by moving its supply chain onto Palantir’s Artificial Intelligence Platform. The platform is, familiarly, “designed to connect disparate data sources into a single common operating picture…” Wendy’s will eventually use Palantir to manage its supply chain and waste prevention, including through “Demand Deviation and Allocation.” All of this will push the fast-food company, with its otherwise folksy aesthetic personified by its ginger-haired, freckled mascot Wendy, toward the increasingly technocratic new age, and bring the Peltz family closer to the Thiel-verse.

Also worth noting is Arianna Huffington’s seat on the board of Twenty. Huffington’s appointment warrants mention solely because of her relationship with another Thiel protégé, OpenAI CEO Sam Altman. The media mogul and the tech entrepreneur recently teamed up to create the health app Thrive AI Health, which gives users a “hyper-personalized” AI health coach.

Thiel has been described as Altman’s “longtime mentor,” and apparently at the beginning of Altman’s career, “Thiel…saw in Altman a magnetic figure who could expand the tech sector’s approach internationally.” Thiel’s rosy view of the OpenAI CEO is evidenced by the mutually beneficial relationship that developed between the two after Altman sold his company Loopt, with Thiel accounting for the bulk of the $21 million that Altman later raised for his own venture capital firm, Hydrazine Capital, according to The Washington Post. Soon after, “Altman’s bond with Thiel blossomed: He helped Thiel’s venture firm, Founders Fund, get access to hot start-ups, and the men often traveled together to speak at events.”

Recently, Palantir and another Thiel-backed company, Anduril, partnered on behalf of the Pentagon to “unlock the full potential of AI for national security,” specifically by retaining data at the “tactical edge” of the battlefield, data that is usually “never retained.” Reportedly, this new partnership will make the collection of this “tactical edge” data possible, and the data will be used to train AI models and “deliver the U.S. an advantage over adversaries.” It will also enable “collaboration with leading AI developers, including [Sam Altman’s] OpenAI” (emphasis added).

It now appears that Thiel, through the aforementioned relationships, is not far removed from (though not directly intertwined with) Healthy Together and Kinsa, all while Palantir further entrenches its relationship with the CDC (as well as the DoD) and positions itself as a health data empire.

Kinsa’s Connections to Bill Gates

Notably, the CEO and founder of Kinsa, Inder Singh, hails from the Clinton Health Access Initiative (CHAI), where he formerly served as Executive Vice President. CHAI was controversially created with significant involvement from Jeffrey Epstein, the now infamous pedophile, sex trafficker and intelligence asset, and Epstein was simultaneously involved with Bill Gates during that same period, including with the Gates family’s philanthropy (Epstein was notably an advocate of transhumanism and eugenics, which informed many of his “philanthropic” activities and his funding of prominent scientists). Unsurprisingly, CHAI has been funded by none other than the Bill & Melinda Gates Foundation to the tune of tens of millions of dollars, and it also shares a nearly identical goal of vaccinating “as many children as possible” with its partner, Bill Gates’ Gavi, the Vaccine Alliance, by “creating dramatic and sustainable improvements to vaccine markets and national immunization programs.” The Gates Foundation notably envisions AI as central to its global health goals, as it funded an effort by the United States Agency for International Development (USAID), an agency that often acts as a CIA front, to push for the global implementation of AI in healthcare.

As a previous Unlimited Hangout investigation noted, Gavi’s stated goal is to create “‘healthy markets’ for vaccines by ‘encourag[ing] manufacturers to lower vaccine prices for the poorest countries in return for long-term, high-volume and predictable demand from those countries.’”

And to bring these relationships full circle once again, Palantir joined “The Trinity Challenge” in 2020, “a global coalition of prominent academic institutions and foundations as well as leading technology, health and insurance companies with the aim of increasing the world’s resilience against the pandemics of the future by harnessing the power of data, analytics.” Its members included Google, Microsoft, Facebook, McKinsey & Company and, notably, the Gates Foundation. The Trinity Challenge has been criticized for framing invasive surveillance and neo-Malthusian policies as “solutions” to the “next” pandemic and as beneficial for global public health.

Indeed, Gates’ influence may have found its way into CFA itself, as CFA director Dylan George previously served as vice president of biotech firm Ginkgo Bioworks. Ginkgo Bioworks, a partner of the World Economic Forum, was heavily funded when it went public by Cascade Investment, an investment firm controlled by Bill Gates. By using a “constellation” of shell companies that all connect back to Cascade, Gates also accumulated enough property to make himself the biggest farmland owner in the United States during the Covid era. Cascade is still the largest shareholder of Ginkgo Bioworks.

It should also be noted that Gates supports the United Nations’ (UN) efforts to implement a universal Digital ID as a “human right” (in reality, a precondition for accessing other human rights) for the entire global population by 2030 through the UN’s Sustainable Development Goal 16.9. Previously, the EU Digital Covid Certificate enabled governments to, as the Pfizer “Outlook” paper advocates, “restrict international travel” based on a form of digital ID that, importantly, had health data attached to it (in this case, only COVID-19 immunization status, test results and records of previous infections). Several groups seeking to impose digital ID infrastructure globally were intimately involved in producing digital vaccine passports during the Covid-19 crisis.

It is important to remember that local travel restrictions, or “decisions surrounding group migration,” during the COVID-19 pandemic were enforced using both physical and digital proof of vaccination, a form of ID that attaches “health data” to the ID, with that “health data” then being used to determine one’s access to certain human rights (such as entry into certain businesses/events or access to jobs).

Gates’ funding of the CDC, as well as his connection to the CFA and the program’s stated policy aims of analyzing disease spread to identify the “highest risk” key populations and using data to influence “group migration” rights, raises an important question: will CFA data be attached to a digital ID, and how might that data be used to determine one’s human rights (such as group migration, for example) during a declared, or anticipated, public health crisis?

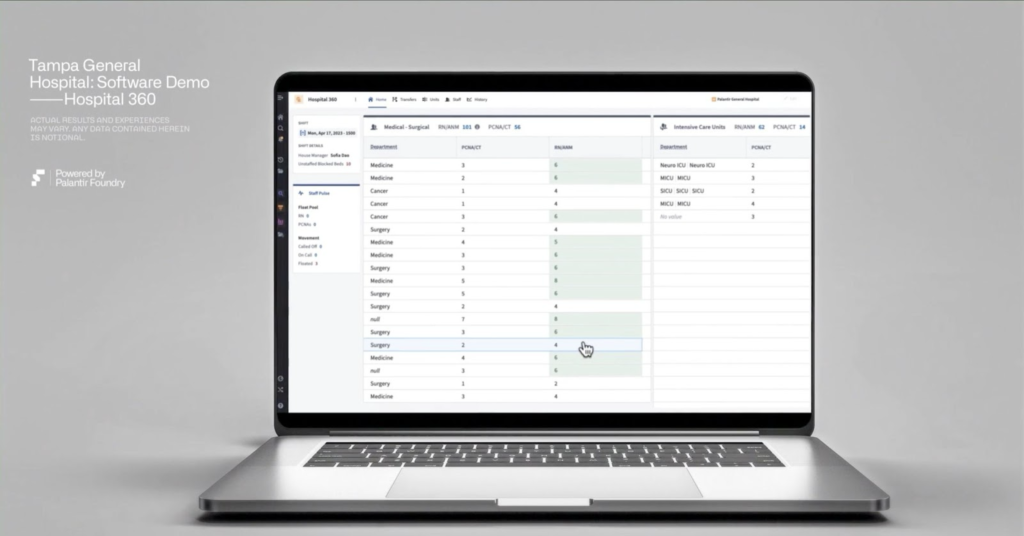

AI Hospitals

While Palantir’s recent move into AI hospital management is not an exact representation of life with digital ID, some of its features suggest what a future governed by constant surveillance and AI decision-making might look like, not only in healthcare, but in the workplace in general.

According to its website’s “Hospitals for Palantir” page, Palantir is already “powering nurse scheduling, nurse staffing, transfer center optimization, discharge management, and other critical workflows” for more than 15% of US hospitals. Palantir’s “healthcare engineers” work “directly alongside caregivers and hospital operators to build and tailor workflows, prioritizing speed, effectiveness, and usability.”

The tech company has “deployed a first of its kind application that takes into account nurse preferences, granular patient demand forecasts, staff competencies, and existing staff information to automatically generate AI-driven, optimal nursing schedules,” a seemingly innocent project. Yet the degree of influence that this “first of its kind” application already appears to wield in American hospitals sets a troubling precedent for humanity in the workplace, specifically by dehumanizing the logistical and bureaucratic side of hospitals through AI substitutes under the auspices of “objective” machine decision-making.

Palantir’s Foundry is already forecasting “the patient census for a hospital based on data from the emergency department, operating rooms, transfers, discharges, and more.” The tech systems also keep track of the skills and records of every nurse in a hospital, “including (but not limited to) competencies, languages, skills, certifications, tenure, and other experience profile information.” Both types of data reportedly generate the optimal nursing schedule for the entire hospital, down to any given “unit, floor, department, [or] facility.”

While these systems project the image of an altruistic product aimed at providing a more seamless experience for patients and hospital staff alike, critical media scholar Dr. Nolan Higdon, co-author of the book “Surveillance Education,” which explores the invasive nature of surveillance technologies in schools (as well as the intersection between Big Tech and the military-industrial complex), told Unlimited Hangout that Big Tech companies recycle this altruism pitch time and time again as a way to mask their ulterior motives:

“Every time these companies employ data collection mechanisms under the auspices of improving the lives of consumers, it ultimately ends up being a scheme to make more money, and at the expense of labor and the consumer.

What we’ve seen consistently over and over is that whatever readout they get from the data ends up being an excuse to cut jobs, to overwork people, to minimize services and access to services as a way to cut costs…So it’s: if we collect data, how much more work can we throw on the back of this nurse? Can we throw enough work on the back of this nurse that patients will complain and we can cut another job or two? These are the kinds of questions that this data is trying to help people answer.”

Higdon also fears that this drive to increase profits could enable even more vicious price gouging of patients, a practice the healthcare industry already engages in with little transparency. Palantir claims that its tech can recommend in real time where a hospital should place incoming patients based on “patient-specific criteria” and “current and upcoming [hospital] capacity,” which would clearly require access to a breadth of patient data. Higdon wonders whether insurance providers might weaponize this data against patients by raising their premiums based on the patient’s lifestyle choices:

“Not everybody is completely honest with their insurer about maybe how much they sleep or how much they drink or how much they party or whatever, right? These tools can be a way to surveil people to find that information out to set premiums that are aimed at maximizing the amount of money you get from customers and saving money for the insurance company.

The more they know about your life, the more justifications they can make about setting premiums. Maybe in their algorithmic counts, if you sleep six hours a night instead of eight hours a night, you’re more likely to have these health outcomes. So they’re going to charge you more money now until your sleep patterns change. Or maybe you eat X amount of processed food and that’s been associated with this outcome. So they’re going to charge more on this premium.

There’s so [much] ‘in the weeds’ evidence that can be used against individuals, and you don’t have any recourse. Because again, they go back to, ‘well, the objective algorithm has given us this readout.’”

While Palantir vows that it keeps “patient privacy and information governance a top priority,” promises like these simply provide tech companies with smokescreens to obscure the vast amount of data sharing they engage in. Higdon says that while many companies, like Palantir, promise users that they don’t sell user data, these companies still share it among the institutions they have entered agreements with. On Palantir’s Medium blog, for example, the company assures readers that it does not sell or share its data with other customers… that is, “except where those specific clients have entered into an agreement with each other.”

However, whether this applies specifically to Palantir’s first and longest-running client, the CIA, remains unclear. Many tech companies, particularly social media giants and search engines, were revealed in past years to have illegally shared user data with US intelligence to facilitate massive post-9/11 surveillance programs of dubious legality. Importantly, at Palantir’s origins, its founders collaborated with the intelligence state to resurrect a DARPA-CIA surveillance program that sought to merge existing databases into one “virtual, centralized, grand database.” Given this, it seems more than plausible that Palantir allows US intelligence to access more of the data the company handles than it publicly acknowledges.

Palantir also creates profiles of Americans for the CIA based on their online activities (and other surveillable activities). If Higdon’s concerns about data sharing do indeed apply to Palantir, then Palantir could easily fold its trove of American health data into such profiles. In fact, the CFA requires organizations applying to become partners of the program to describe how they plan to leverage novel data sources “to create new analytic products.” One example it offers applicants involves using “data fusion techniques” to merge data extracted from the internet with “existing public health data streams” in order to create detailed forecasts of current (or future) events that “reduce latency.”

This is particularly troubling given Palantir’s role in implementing “predictive policing,” i.e., pre-crime, in the US, and given that law enforcement and intelligence agencies could weaponize mental health data in particular to stop crimes before they occur. While some may deem this scenario far-fetched, it is worth considering that the previous Trump administration closely considered a policy to use AI to analyze innocent Americans’ social media profiles for posts that could indicate “early warning signs of neuro-psychiatric violence” as a means of stopping mass shootings before they occur. Under that program, the government would subject Americans flagged by the AI to various mandated mental health interventions or preventive house arrest. A scenario in which law enforcement uses mental health data from healthcare settings tied to Palantir instead of, or in combination with, social media posts is not difficult to envision.

Further, while Palantir claims to make patient privacy a “top priority,” regulatory bodies have yet to enact any meaningful oversight to prevent the company from sharing this data with other organizations, much less from moving it within Palantir itself and thus to the other branches of government it actively works with. This lack of transparency creates a “hall of mirrors” that blurs the lines between organizations and therefore obscures who owns what data, covertly eliminating any right to privacy while simultaneously enabling the corporate construction of a digitized global awareness built from the data of unknowing civilians, in this case all in the name of “public health.”

The Hall of Mirrors

Whether the CDC CFA will deliver on its stated commitment to use groundbreaking methods to improve public health remains to be seen. Yet what this article definitively illustrates is that the CFA further entrenches both the public and private wings of the public health apparatus in the “hall of mirrors” of intelligence agency-connected corporations and public institutions. Behind these organizations sit some of the most influential kingmakers in Washington, hailing from Silicon Valley and seemingly committed to using any industry or crisis to expand their surveillance of human bodies, which equips them with the capital to become the robber barons of the digital age.

Importantly, however, the CFA does not represent a shift in public health policy, but rather a firm step forward in a years-long effort to drive the entire public health apparatus into the hands of hawkish national security ideologues and their oligarchic, technocratic benefactors. For ordinary people, the implications of such policy pursuits may be significant. During the “future pandemics” that this entire industry is already spending billions of dollars preparing for (with expected returns in mind), the CFA’s surveillance could dictate average civilians’ international travel rights, even their ability to move through their own communities, which medicines they take or have access to, and whether or not they are deemed “high risk.”

The actors behind this system are, unsurprisingly, the same ones that planned, directed and implemented the halfway-digitized COVID-19 iterations of similar biosecurity policy. The fingerprints of figures like Gates, with the head of the CFA hailing from the Gates-funded Ginkgo Bioworks, and those of Big Pharma and the Pentagon are plastered all over the program’s doctrine.

Critically, the program’s existence should be considered within the context of the incoming Trump administration, which boasts deep ties to the program’s most prominent figures. The Thiel-verse has exerted its influence over D.C. politics successfully, as demonstrated not only by the plethora of government contracts won by Thiel-connected companies across agencies, but also by the infiltration of Thiel proxies like Founders Fund alumnus J.D. Vance into Trump’s cabinet.

As Stavroula Pabst recently noted in Responsible Statecraft, Thiel “bankrolled fellow venture capitalist and now-VP-elect J.D. Vance’s successful 2022 Senate campaign in Ohio to the tune of $15 million, the most anyone has given to a Senate candidate. Thiel and Vance are now longtime associates, with Thiel having previously assisted Vance’s own venture capital career.” While Trump ultimately chose billionaire national security contractor Stephen Feinberg as his Deputy Secretary of Defense, he had been eyeing Trae Stephens, formerly of Palantir and a “longtime partner at Thiel’s Founders Fund and co-founder and Executive Chairman at Anduril,” for the position, further demonstrating that the relationship between Thiel and Trump continues to endure. In addition, another Thiel proxy, Jim O’Neill, who boasts deep ties to mRNA tech, has been nominated to be the No. 2 at HHS and would likely serve as HHS Secretary if the Senate rejects Robert F. Kennedy Jr.’s confirmation. O’Neill’s upcoming role at HHS heralds not only a continuation but a potential deepening of Palantir’s involvement at HHS sub-agencies like the CDC.

Companies such as Kinsa and Healthy Together stand as well-positioned potential beneficiaries of this Thiel-friendly relationship with the incoming Trump administration, not only because of the connections Diesel Peltz boasts to PayPal Mafia member Elon Musk, Trump himself and early PayPal investor Ron Conway, but also because their products have made them prominent data-miners at the intersection of healthcare and Big Tech. From this perspective, a myriad of other companies, including defense contractors Amazon Web Services, Microsoft and Google, sit in a similar position.

Exactly which companies will be tasked with fulfilling certain responsibilities remains to be seen, but the agenda at large remains the same: massively increase the surveillance powers of this biosurveillance apparatus, and then use these powers to influence public policy, increase control over civilian movement and access to rights, secure deregulated markets for biotechnology and, most importantly, make everything about the individual civilian subject to the surveillance, and scrutiny, of the shadowy organizations occupying the watchtower of the digital panopticon.

The privatization, and thus the on-the-surface “decentralization,” of this program gives it the appearance of a natural evolution of the free market. Yet Palantir’s origins in the DARPA/CIA Total Information Awareness (TIA) program, as well as the merging of all three of these sectors and the clear gains all stand to achieve, suggest a more organized and cynical pursuit of the policies that the CFA appears to be making reality. Together, these industries form a technocratic iteration of the Mighty Wurlitzer. Whatever tune it plays for a targeted audience, be it the altruistic notions of public health, the terrifying potential of unchecked domestic terrorism or bioterrorism, the crisis of global pandemics or even simple workplace efficiency, every melody this apparatus plays serves to manufacture consent for its ability to conduct ever-expanding surveillance of everyone. This makes the declared “public health” purposes of the biosurveillance apparatus at large highly questionable.

Ultimately, the AI-healthcare system promises to be more efficient, convenient and effective, yet the means by which it is meant to lead the public to a predictive-health utopia involve the elimination of privacy and the dehumanization of healthcare itself. Left to algorithms controlled by corporate sharks and national security hawks, profits, surveillance and top-down influence are an all but guaranteed outcome, but what will the digitization of care do to the physical, mental and spiritual health of everyone else? Perhaps these people, beyond the data that corporations can extract from them, are an afterthought for those behind the AI-healthcare revolution.
