From miles away across the desert, the Great Pyramid looks like a perfect, smooth geometry: a sleek triangle pointing to the stars. Stand at the base, however, and the illusion of smoothness vanishes. You see massive, jagged blocks of limestone. It’s not a slope; it’s a staircase.

Remember this the next time you hear futurists talking about exponential growth.

Intel’s co-founder Gordon Moore (of Moore’s Law) is famously quoted as saying in 1965 that the transistor count on a microchip would double every year. Another Intel executive, David House, later revised this statement to “compute power doubling every 18 months.” For a while, Intel’s CPUs were the poster child of this law. That is, until the growth in CPU performance flattened out like a block of limestone.
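
To see how far apart the two doubling periods in that paragraph drift over time, here is a minimal Python sketch of the compounding arithmetic. The function name `growth_factor` and the 10-year horizon are illustrative assumptions, not figures from Moore or House.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Return the multiplicative growth after `years` for a fixed doubling period."""
    if doubling_period_years <= 0:
        raise ValueError("doubling_period_years must be positive")
    return 2.0 ** (years / doubling_period_years)


# Illustrative horizon only (an assumption chosen for this example).
HORIZON_YEARS = 10

yearly = growth_factor(HORIZON_YEARS, 1.0)           # doubling every year
eighteen_months = growth_factor(HORIZON_YEARS, 1.5)  # doubling every 18 months

print(f"Doubling every year for {HORIZON_YEARS} years: {yearly:,.0f}x")
print(f"Doubling every 18 months for {HORIZON_YEARS} years: {eighteen_months:,.0f}x")
```

Over a decade, the yearly cadence compounds to 1,024x, while the 18-month cadence compounds to roughly 100x. Even on paper, the choice of doubling period changes the outcome by an order of magnitude.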

If you zoom out, though, the next limestone block was already there: the growth in compute simply shifted from CPUs to the world of GPUs. Jensen Huang, Nvidia’s CEO, played a long game and came out a strong winner, building his own stepping stones initially with gaming, then computer vision and, recently, generative AI.

The illusion of smooth growth

Technology growth is full of sprints and plateaus, and gen AI is not immune. The current wave is driven by transformer architecture. To quote Anthropic’s CEO and co-founder Dario Amodei: “The exponential continues until it doesn’t. And every year we’ve been like, ‘Well, this can’t possibly be the case that things will continue on the exponential,’ and then every year it has.”

But just as the CPU plateaued and GPUs took the lead, we’re seeing signs that LLM growth is shifting paradigms again. For example, late in 2024, DeepSeek surprised the world by training a world-class model on an impossibly small budget, in part by using the mixture-of-experts (MoE) technique.

Do you remember where you recently saw this technique mentioned? Nvidia’s Rubin press release: The technology includes “…the latest generations of Nvidia NVLink interconnect technology… to accelerate agentic AI, advanced reasoning and massive-scale MoE model inference at up to 10x lower cost per token.”

Jensen knows that achieving that coveted exponential growth in compute doesn’t come from pure brute force anymore. Sometimes you need to shift the architecture entirely to place the next stepping stone.

The latency crisis: Where Groq fits in

This long introduction brings us to Groq.

The biggest gains in AI reasoning capabilities in 2025 were driven by “inference time compute” or, in lay terms, “letting the model think for a longer period of time.” But time is money. Consumers and businesses don’t like waiting.

Groq comes into play here with its lightning-speed inference. If you bring together the architectural efficiency of models like DeepSeek and the sheer throughput of Groq, you get frontier intelligence at your fingertips. By executing inference faster, you can “out-reason” competitive models, offering a “smarter” system to customers without the penalty of lag.

From universal chip to inference optimization

For the last decade, the GPU has been the universal hammer for every AI nail. You use H100s to train the model; you use H100s (or trimmed-down versions) to run the model. But as models shift toward "System 2" thinking, where the AI reasons, self-corrects and iterates before answering, the computational workload changes.

Training requires massive parallel brute force. Inference, especially for reasoning models, requires faster sequential processing. It must generate tokens instantly to facilitate complex chains of thought without the user waiting minutes for an answer. Groq’s LPU (Language Processing Unit) architecture removes the memory bandwidth bottleneck that plagues GPUs during small-batch inference, delivering lightning-fast inference.
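
One common back-of-envelope way to see why small-batch inference tends to be limited by memory bandwidth: at batch size 1, generating each token requires reading roughly all of the model's active weights from memory once, so the decode rate cannot exceed bandwidth divided by the bytes read per token. The Python sketch below encodes that approximation. The function name and every number in the example are hypothetical assumptions for illustration; they do not describe any specific Nvidia or Groq product, and the estimate ignores KV-cache traffic, compute limits and batching.

```python
def max_decode_tokens_per_second(
    memory_bandwidth_bytes_per_s: float,
    active_params: float,
    bytes_per_param: float,
) -> float:
    """Upper bound on batch-1 decode speed when weight reads dominate.

    Assumes every active parameter is read from memory once per token.
    """
    bytes_per_token = active_params * bytes_per_param
    if bytes_per_token <= 0:
        raise ValueError("active_params and bytes_per_param must be positive")
    return memory_bandwidth_bytes_per_s / bytes_per_token


# Hypothetical accelerator and model (illustrative values, not measurements).
bandwidth = 1e12        # 1 TB/s of memory bandwidth (assumed)
dense_params = 70e9     # 70B parameters, all active per token (assumed)
bytes_per_param = 2     # 16-bit weights (assumed)

bound = max_decode_tokens_per_second(bandwidth, dense_params, bytes_per_param)
print(f"Bandwidth-bound ceiling: {bound:.1f} tokens/s")
```

Under this approximation there are two levers: raise the effective memory bandwidth, or reduce the number of parameters touched per token. The second lever is part of why mixture-of-experts models, which activate only a subset of their weights for each token, are attractive for inference.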

The engine for the next wave of growth

For the C-suite, this potential convergence solves the "thinking time" latency crisis. Consider the expectations from AI agents: We want them to autonomously book flights, code entire apps and research legal precedent. To do this reliably, a model might need to generate 10,000 internal "thought tokens" to verify its own work before it outputs a single word to the user.

On a standard GPU: 10,000 thought tokens might take 20 to 40 seconds. The user gets bored and leaves.

On Groq: That same chain of thought happens in less than 2 seconds.
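
The two figures above imply a decode rate for each case. Here is a minimal Python sketch of that arithmetic, using only the numbers quoted in this article (10,000 tokens, 20 to 40 seconds, under 2 seconds). The function name is illustrative, and the results are implied rates, not benchmarks.

```python
def tokens_per_second(tokens: int, seconds: float) -> float:
    """Return the average generation rate needed to emit `tokens` in `seconds`."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return tokens / seconds


THOUGHT_TOKENS = 10_000  # figure quoted in the article

gpu_low = tokens_per_second(THOUGHT_TOKENS, 40)    # slow end of the GPU range
gpu_high = tokens_per_second(THOUGHT_TOKENS, 20)   # fast end of the GPU range
groq_floor = tokens_per_second(THOUGHT_TOKENS, 2)  # "less than 2 seconds" means at least this

print(f"Standard GPU (implied): {gpu_low:.0f} to {gpu_high:.0f} tokens/s")
print(f"Groq (implied floor): {groq_floor:.0f} tokens/s")
print(f"Implied speedup: at least {groq_floor / gpu_high:.0f}x to {groq_floor / gpu_low:.0f}x")
```

In other words, the comparison amounts to a few hundred tokens per second versus several thousand, a gap of at least 10x to 20x on the article's own numbers.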

If Nvidia integrates Groq’s technology, they solve the "waiting for the robot to think" problem. They preserve the magic of AI. Just as they moved from rendering pixels (gaming) to rendering intelligence (gen AI), they’d now move to rendering reasoning in real time.

Furthermore, this creates a formidable software moat. Groq’s biggest hurdle has always been the software stack; Nvidia’s biggest asset is CUDA. If Nvidia wraps its ecosystem around Groq’s hardware, they effectively dig a moat so wide that competitors can’t cross it. They would offer the universal platform: The best environment to train and the most efficient environment to run (Groq/LPU).

Consider what happens when you couple that raw inference power with a next-generation open source model (like the rumored DeepSeek 4): You get an offering that would rival today’s frontier models in cost, performance and speed. That opens up opportunities for Nvidia, from directly entering the inference business with its own cloud offering, to continuing to power a growing number of exponentially growing customers.

The next step on the pyramid

Returning to our opening metaphor: The "exponential" growth of AI is not a smooth line of raw FLOPs; it’s a staircase of bottlenecks being smashed.

Block 1: We couldn't calculate fast enough. Solution: The GPU.

Block 2: We couldn't train deep enough. Solution: Transformer architecture.

Block 3: We can't "think" fast enough. Solution: Groq’s LPU.

Jensen Huang has never been afraid to cannibalize his own product lines to own the future. By validating Groq, Nvidia wouldn't just be buying a faster chip; they’d be bringing next-generation intelligence to the masses.

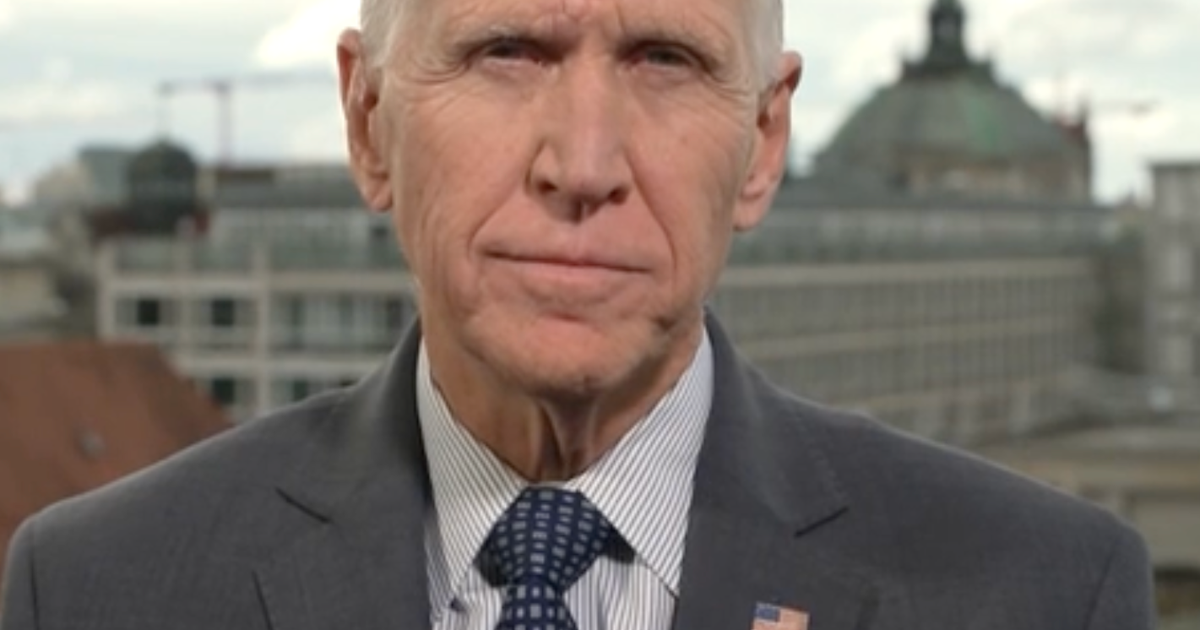

Andrew Filev, founder and CEO of Zencoder
