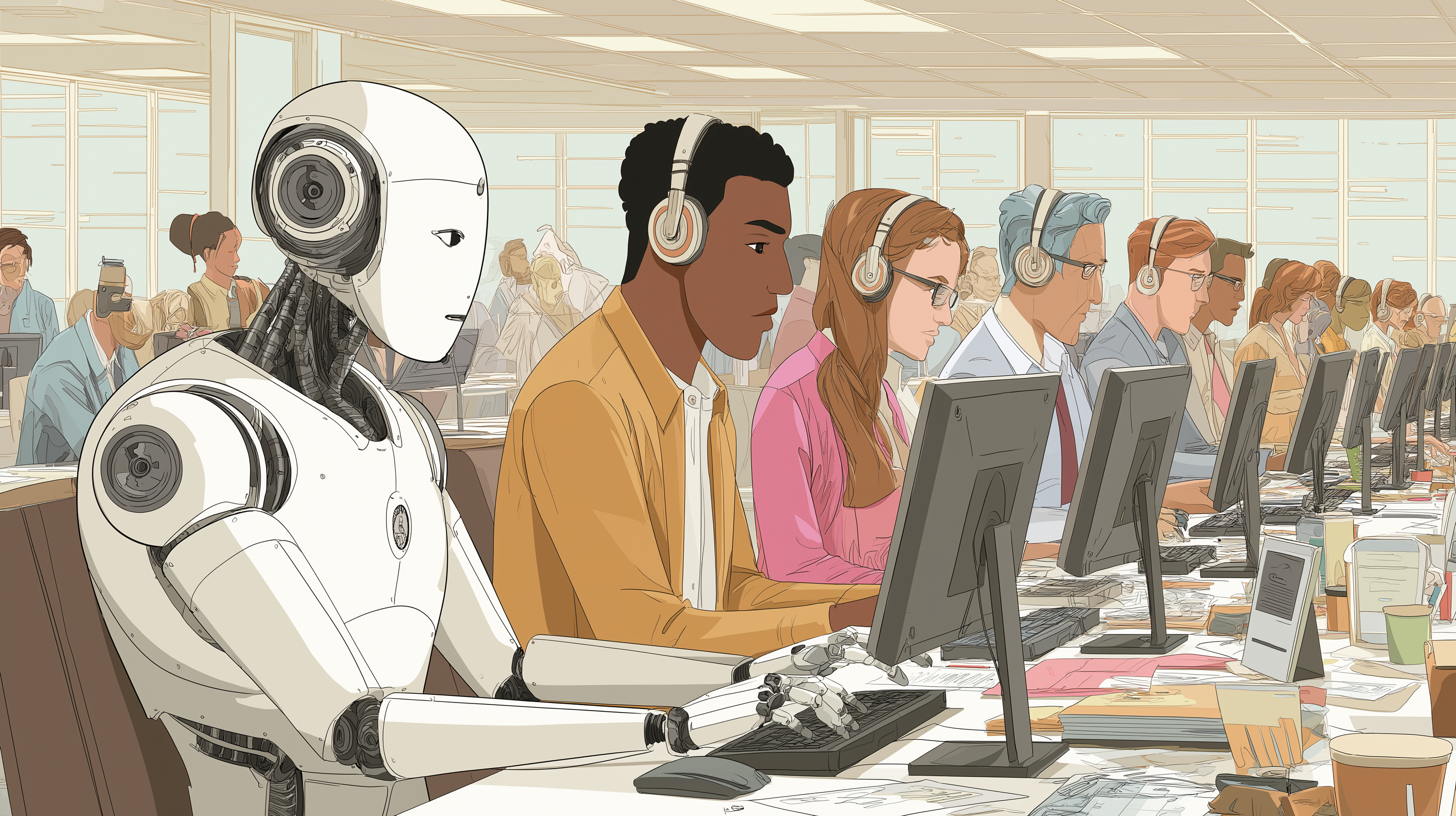

The AI narrative has mostly been dominated by model performance on key industry benchmarks. But as the field matures and enterprises look to draw real value from advances in AI, we’re seeing parallel research in techniques that help productionize AI applications.

At VentureBeat, we’re tracking AI research that can help us understand where the practical implementation of the technology is heading. We’re looking forward to breakthroughs that aren’t just about the raw intelligence of a single model, but about how we engineer the systems around them. As we approach 2026, here are four trends that can represent the blueprint for the next generation of robust, scalable enterprise applications.

Continual learning

Continual learning addresses one of the key challenges of current AI models: teaching them new information and skills without destroying their existing knowledge (a failure often referred to as “catastrophic forgetting”).

Traditionally, there are two ways to solve this. One is to retrain the model with a mix of old and new information, which is expensive, time-consuming, and extremely complicated. This makes it inaccessible to most companies using models.

Another workaround is to provide models with in-context information through techniques such as RAG. However, these techniques don’t update the model’s internal knowledge, which can prove problematic as you move away from the model’s knowledge cutoff and facts start conflicting with what was true at the time of the model’s training. They also require a lot of engineering and are limited by the context windows of the models.

Continual learning enables models to update their internal knowledge without the need for retraining. Google has been working on this with several new model architectures. One of them is Titans, which proposes a different primitive: a learned long-term memory module that lets the system incorporate historical context at inference time. Intuitively, it shifts some “learning” from offline weight updates into an online memory process, closer to how teams already think about caches, indexes, and logs.

Nested Learning pushes the same theme from another angle. It treats a model as a set of nested optimization problems, each with its own internal workflow, and uses that framing to address catastrophic forgetting.

Standard transformer-based language models have dense layers that store the long-term memory obtained during pretraining and attention layers that hold the immediate context. Nested Learning introduces a “continuum memory system,” where memory is seen as a spectrum of modules that update at different frequencies. This creates a memory system that’s more attuned to continual learning.
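To make the idea of modules that update at different frequencies more concrete, here is a toy sketch in Python. It is not the Nested Learning implementation. The `MemoryModule` class, its `period` and `rate` parameters, and the scalar moving-average state are all assumptions chosen for illustration, standing in for whatever learned modules a real system would use.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MemoryModule:
    """Hypothetical memory module holding one scalar running estimate."""
    period: int         # the module updates once every `period` steps
    rate: float         # how far a single update moves the stored state
    state: float = 0.0
    updates: int = 0

    def maybe_update(self, step: int, signal: float) -> None:
        # Fast modules (small period) track recent inputs closely;
        # slow modules (large period) change rarely and retain older state.
        if step % self.period == 0:
            self.state += self.rate * (signal - self.state)
            self.updates += 1


def run(modules: List[MemoryModule], signals: List[float]) -> List[MemoryModule]:
    """Feed a stream of signals to every module, counting steps from 1."""
    for step, signal in enumerate(signals, start=1):
        for module in modules:
            module.maybe_update(step, signal)
    return modules


fast = MemoryModule(period=1, rate=0.5)
medium = MemoryModule(period=4, rate=0.3)
slow = MemoryModule(period=16, rate=0.1)
run([fast, medium, slow], [1.0] * 16)
print(fast.updates, medium.updates, slow.updates)
```

In this toy setup the fast module has nearly absorbed the new signal after 16 steps, while the slow module has moved only slightly. That is the intuition behind keeping older knowledge stable while newer information is still being absorbed.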

Continual learning is complementary to the work being done on giving agents short-term memory through context engineering. As it matures, enterprises can expect a generation of models that adapt to changing environments, dynamically deciding which new information to internalize and which to preserve in short-term memory.

World models

World models promise to give AI systems the ability to understand their environments without the need for human-labeled data or human-generated text. With world models, AI systems can better respond to unpredictable and out-of-distribution events and become more robust against the uncertainty of the real world.

More importantly, world models open the way for AI systems that can move beyond text and solve tasks that involve physical environments. World models try to learn the regularities of the physical world directly from observation and interaction.

There are different approaches to creating world models. DeepMind is building Genie, a family of generative end-to-end models that simulate an environment so an agent can predict how the environment will evolve and how actions will change it. It takes in an image or prompt along with user actions and generates the sequence of video frames that reflect how the world changes. Genie can create interactive environments that can be used for different purposes, including training robots and self-driving cars.

World Labs, a new startup founded by AI pioneer Fei-Fei Li, takes a slightly different approach. Marble, World Labs’ first AI system, uses generative AI to create a 3D model from an image or a prompt, which can then be used by a physics and 3D engine to render and simulate the interactive environment used to train robots.

Another approach is the Joint Embedding Predictive Architecture (JEPA) espoused by Turing Award winner and former Meta AI chief Yann LeCun. JEPA models learn latent representations from raw data so the system can anticipate what comes next without generating every pixel.

JEPA models are much more efficient than generative models, which makes them suitable for fast-paced real-time AI applications that need to run on resource-constrained devices. V-JEPA, the video version of the architecture, is pre-trained on unlabeled internet-scale video to learn world models through observation. It then adds a small amount of interaction data from robot trajectories to support planning. That combination hints at a path where enterprises leverage abundant passive video (training, inspection, dashcams, retail) and add limited, high-value interaction data where they need control.

In November, LeCun confirmed that he will be leaving Meta and starting a new AI startup that will pursue “systems that understand the physical world, have persistent memory, can reason, and can plan complex action sequences.”

Orchestration

Frontier LLMs continue to advance on very challenging benchmarks, often outperforming human experts. But when it comes to real-world tasks and multi-step agentic workflows, even strong models fail: They lose context, call tools with the wrong parameters, and compound small errors.

Orchestration treats these failures as systems problems that can be addressed with the right scaffolding and engineering. For example, a router chooses between a fast small model, a bigger model for harder steps, retrieval for grounding, and deterministic tools for actions.
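As a rough illustration of the router idea, the Python sketch below maps each step of a workflow to one of the four kinds of component named above. The `Step` schema, the `kind` labels, the `difficulty` score, and the `hard_threshold` cutoff are hypothetical. A production router would likely rely on learned or measured signals rather than hand-set rules.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One step of an agentic workflow (hypothetical schema)."""
    kind: str          # "action", "lookup", or "reason" (illustrative labels)
    difficulty: float  # 0.0 (easy) to 1.0 (hard); assumed to be estimated upstream
    payload: str


def route(step: Step, hard_threshold: float = 0.6) -> str:
    """Return the name of the component that should handle this step."""
    if step.kind == "action":
        return "deterministic_tool"  # side effects go through fixed code paths
    if step.kind == "lookup":
        return "retrieval"           # ground the step in external documents
    if step.difficulty >= hard_threshold:
        return "large_model"         # harder reasoning gets the bigger model
    return "small_model"             # everything else takes the cheap, fast path


plan = [
    Step("lookup", 0.2, "find the refund policy"),
    Step("reason", 0.8, "decide whether the case qualifies"),
    Step("reason", 0.1, "draft a short reply"),
    Step("action", 0.3, "issue the refund"),
]
routes = [route(step) for step in plan]
print(routes)
```

Keeping the routing decision in one small function also makes it easy to log and audit, which is useful when failures need to be traced back to a specific step.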

There are now several frameworks that create orchestration layers to improve the efficiency and accuracy of AI agents, especially when using external tools. Stanford's OctoTools is an open-source framework that can orchestrate multiple tools without the need to fine-tune or adjust the models. OctoTools uses a modular approach that plans a solution, selects tools, and passes subtasks to different agents. OctoTools can use any general-purpose LLM as its backbone.

Another approach is to train a specialized orchestrator model that can divide labor between different components of the AI system. One such example is Nvidia’s Orchestrator, an 8-billion-parameter model that coordinates different tools and LLMs to solve complex problems. Orchestrator was trained through a special reinforcement learning technique designed for model orchestration. It can tell when to use tools, when to delegate tasks to small specialized models, and when to use the reasoning capabilities and knowledge of large generalist models.

One of the characteristics of these and other similar frameworks is that they can benefit from advances in the underlying models. So as we continue to see advances in frontier models, we can expect orchestration frameworks to evolve and help enterprises build robust and resource-efficient agentic applications.

Refinement

Refinement techniques turn “one answer” into a controlled process: propose, critique, revise, and verify. The workflow uses the same model to generate an initial output, produce feedback on it, and iteratively improve it, without additional training.
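The propose, critique, revise, and verify cycle can be sketched as a small control loop. The Python example below is a minimal illustration, not any vendor's system. The `refine` function and its four callables are hypothetical names, and the toy arithmetic stubs stand in for what would be calls to the same underlying model and to an external checker.

```python
from typing import Callable, Tuple


def refine(
    task: int,
    propose: Callable[[int], int],
    critique: Callable[[int, int], str],
    revise: Callable[[int, int, str], int],
    verify: Callable[[int, int], bool],
    max_rounds: int = 3,
) -> Tuple[int, bool, int]:
    """Return (answer, verified, revision rounds used)."""
    answer = propose(task)
    for rounds_used in range(max_rounds + 1):
        if verify(task, answer):
            return answer, True, rounds_used
        if rounds_used == max_rounds:
            break  # revision budget exhausted; give up unverified
        feedback = critique(task, answer)
        answer = revise(task, answer, feedback)
    return answer, False, max_rounds


# Toy stubs: find the integer square root of `task` from a rough first guess.
def propose(task: int) -> int:
    return 5


def critique(task: int, answer: int) -> str:
    return "too low" if answer * answer < task else "too high"


def revise(task: int, answer: int, feedback: str) -> int:
    return answer + 1 if feedback == "too low" else answer - 1


def verify(task: int, answer: int) -> bool:
    return answer * answer == task


result = refine(49, propose, critique, revise, verify)
print(result)
```

The explicit `max_rounds` budget and the separate `verify` step are the design choices that keep the loop controlled: the system stops either on a checked answer or after a bounded amount of extra compute.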

While self-refinement techniques have been around for a few years, we might be at a point where we can see them provide a step change in agentic applications. This was put on full display in the results of the ARC Prize, which dubbed 2025 the “Year of the Refinement Loop” and wrote, “From an information theory perspective, refinement is intelligence.”

ARC tests models on complicated abstract reasoning puzzles. ARC’s own analysis reports that the top verified refinement solution, built on a frontier model and developed by Poetiq, reached 54% on ARC-AGI-2, beating the runner-up, Gemini 3 Deep Think (45%), at half the price.

Poetiq’s solution is a recursive, self-improving system that is LLM-agnostic. It’s designed to leverage the reasoning capabilities and knowledge of the underlying model to reflect on and refine its own solution and to invoke tools such as code interpreters when needed.

As models become stronger, adding self-refinement layers will make it possible to get more out of them. Poetiq is already working with partners to adapt its meta-system to “handle complex real-world problems that frontier models struggle to solve.”

How to track AI research in 2026

A practical way to read the research in the coming year is to watch which new techniques can help enterprises move agentic applications from proof-of-concepts into scalable systems.

Continual learning shifts rigor toward memory provenance and retention. World models shift it toward robust simulation and prediction of real-world events. Orchestration shifts it toward better use of resources. Refinement shifts it toward smart reflection and correction of answers.

The winners will not only pick strong models, they will build the control plane that keeps those models correct, current, and cost-efficient.