Experts cite the need for regulatory policies and a code of ethics governing AI use

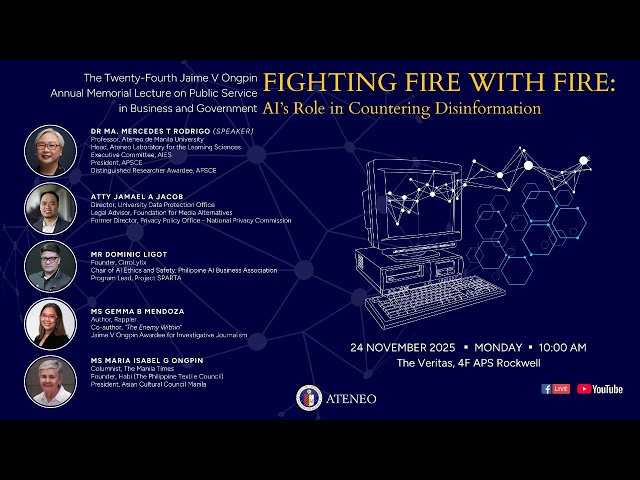

MANILA, Philippines – Artificial intelligence (AI) can be both a potential vector for spreading disinformation and a means of fighting it, according to experts who spoke at the 24th Jaime V. Ongpin Annual Memorial Lecture at the Ateneo Professional Schools on Monday, November 24.

In her keynote speech, Professor Maria Mercedes Rodrigo, head of the Ateneo Laboratory for the Learning Sciences, said that “AI has accelerated and democratized the ability to create deepfakes: synthetic text, images, audio, video of events that never took place.”

She added, “Generative AI has the potential to inundate media with deepfakes, misinformation, and synthetic content, and the Philippines has been designated patient zero in the context of global disinformation largely due to the significant prevalence of misinformation during the 2016 Philippine elections.”

According to the study, 14 experts (six industry professionals, three academics, three civil society members, and two members of the media) took part in a series of interviews for the research.

Within the industry, the two most common applications of AI were fraud detection and anti-money laundering campaigns, as well as personalized marketing campaigns.

In her speech, Rodrigo cited the need for regulatory policies and a code of ethics governing AI use. Industry and academic key informants, she said, affirmed the need for ethical and regulatory policies to ensure AI safety.

Meanwhile, “users and creators of AI applications must be cognizant of safety, security, and intellectual property implications.”

“Based on our interviews, we can see that we as a country have the internal capacity and expertise. We have the education and the awareness. What we seem to lack is scale, and what we need is scale,” Rodrigo said.

To cap off her speech, Rodrigo mentioned some of the proposed remedies in the study, which focused on combating disinformation through effective implementation of governance structures, policy adaptations, and collaboration across sectors.

These included a call for a national AI governance framework, aligning with the Department of Trade and Industry’s National AI Strategy Roadmap 2.0, as well as promoting media literacy and public awareness regarding AI as a tool and a means of spreading disinformation to the unwary.

Panel of reactors

A panel of reactors was also on hand to give remarks regarding the possibilities and pitfalls of artificial intelligence. Alongside Rodrigo were lawyer Jamael Jacob, director of the university’s data protection office; Dominic Ligot, the founder of Cirrolytix and the chair of AI ethics and safety at the Philippine AI Business Association; and Gemma Mendoza, Rappler’s head of digital services and lead researcher on disinformation and platforms.

According to Jacob, critics and proponents of AI both readily admit that AI isn’t perfect, and makes mistakes or “hallucinates.” As such, the jury is still out on using AI to combat the bad uses of AI.

He added that transparency was crucial to ascribing responsibility for AI’s uses and any potential missteps, though calls for transparency and accountability are often rejected as AI’s algorithms are treated as trade secrets by big technology companies.

For Ligot, meanwhile, AI is neither a savior nor a villain, and we must treat deepfakes as a present operational threat rather than a distant one. Ligot also stood for limiting the reach of bad actors, rather than policing free expression, by detecting the coordinated spread of falsehoods. The reach of paid disinformation, he explained, vastly outpaces truth, with disinformation amplified by design and more readily going viral.

Mendoza discussed the accountability of tech companies for labeling fake content. She mentioned how a rise in synthetic content and AI slop, particularly deepfakes like hyperrealistic video content, has drowned out real content on social media feeds. This is made worse by the existence of well-used but still little-known AI tools to generate deepfakes.

She mentioned how platforms profit from the spread of deepfakes, with a Reuters report mentioning that, based on internal documents, Meta earns a fortune on fraudulent ads by charging suspected fraudsters more to be amplified rather than investigating the source of the fraud.

Mendoza added, “While innovation should be welcomed, guard rails should be in place and platforms should not be allowed to profit from content theft. This will eventually kill that rich market of information, art, and culture that AI is currently benefiting from.”

The study by Rodrigo, Rommel Jude Ong, Karen Claire Garcia, Charisse Erinn Flores, and Johanna Marion Torres is available to download for free on this page. It was funded by the Konrad Adenauer Stiftung Foundation. – Rappler.com